A pragmatic playbook for operations and product teams: how to use LLMs for real tasks, what patterns work reliably in production, and how to avoid the common traps that turn pilots into expensive experiments.

Start from the decision, not the model

Begin by asking what specific decision or task you want to improve: triage email, draft first-pass contracts, summarise meeting notes, or extract data from documents. LLMs perform best when they are focused on a single, repeatable task with clear success criteria. Define the metric you will use to judge success before you build; this keeps pilots honest and measurable.
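
To make "define the metric first" concrete, here is a minimal Python sketch, assuming an email-triage task. The labelled examples, the `keyword_baseline` function, and the 0.9 target are hypothetical placeholders. The point is that the scoring function and threshold exist before any model is wired in, so every later prototype is judged the same way.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical labelled examples for an email-triage task: (email text, correct label).
GOLD_SET: List[Tuple[str, str]] = [
    ("Invoice #123 is overdue, please pay", "billing"),
    ("Cannot log in to my account", "support"),
    ("Interested in an enterprise plan", "sales"),
    ("The payment page shows an error", "support"),
]

TARGET_ACCURACY = 0.9  # hypothetical success threshold, agreed before building


def accuracy(predict: Callable[[str], str], examples: Sequence[Tuple[str, str]]) -> float:
    """Share of examples where the predicted label matches the labelled answer."""
    if not examples:
        raise ValueError("need at least one labelled example")
    correct = sum(1 for text, label in examples if predict(text) == label)
    return correct / len(examples)


def keyword_baseline(text: str) -> str:
    """A trivial non-LLM baseline that any pilot should beat."""
    lowered = text.lower()
    if "invoice" in lowered or "pay" in lowered:
        return "billing"
    if "log in" in lowered or "password" in lowered:
        return "support"
    return "sales"


score = accuracy(keyword_baseline, GOLD_SET)
print(f"baseline accuracy {score:.2f} vs target {TARGET_ACCURACY}")
```

The baseline scores 0.75: it misroutes the fourth email because it keys on "pay". Cases like that are exactly what a model-based prototype should be measured against.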

Use the right pattern for the job: templates, orchestrated agents, and guarded interfaces

There are three practical LLM patterns you should consider:

Prompt templates for predictable text tasks: use structured, few-shot prompts and examples to get consistent outputs; iterate on phrasing using real inputs.
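
A minimal sketch of this first pattern, assuming an email-classification task. The instruction, labels, and examples below are hypothetical. Keeping the prompt layout fixed and validating the output against an allowed set is what makes results comparable as you iterate on phrasing.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Example:
    text: str
    label: str


ALLOWED_LABELS = ("billing", "support", "sales")

# Hypothetical instruction and examples; in practice, pick examples from real inputs.
INSTRUCTION = (
    "Classify the customer email into exactly one of: "
    + ", ".join(ALLOWED_LABELS)
    + ".\nAnswer with the label only."
)
EXAMPLES = (
    Example("Invoice #123 is overdue", "billing"),
    Example("I cannot reset my password", "support"),
    Example("Can you quote for 50 seats?", "sales"),
)


def build_prompt(email: str, examples: Sequence[Example] = EXAMPLES) -> str:
    """Assemble a fixed-structure few-shot prompt so every request has the same shape."""
    blocks = [INSTRUCTION]
    for ex in examples:
        blocks.append(f"Email: {ex.text}\nLabel: {ex.label}")
    # The new input follows the same layout, leaving the label for the model to complete.
    blocks.append(f"Email: {email.strip()}\nLabel:")
    return "\n\n".join(blocks)


def parse_label(raw: str) -> Optional[str]:
    """Accept only an allowed label; anything else is treated as a failed output."""
    label = raw.strip().lower()
    return label if label in ALLOWED_LABELS else None


print(build_prompt("Do you offer volume discounts?"))
```

Sending the prompt to a model is left out deliberately; `parse_label` is where a malformed answer gets caught instead of being passed downstream.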

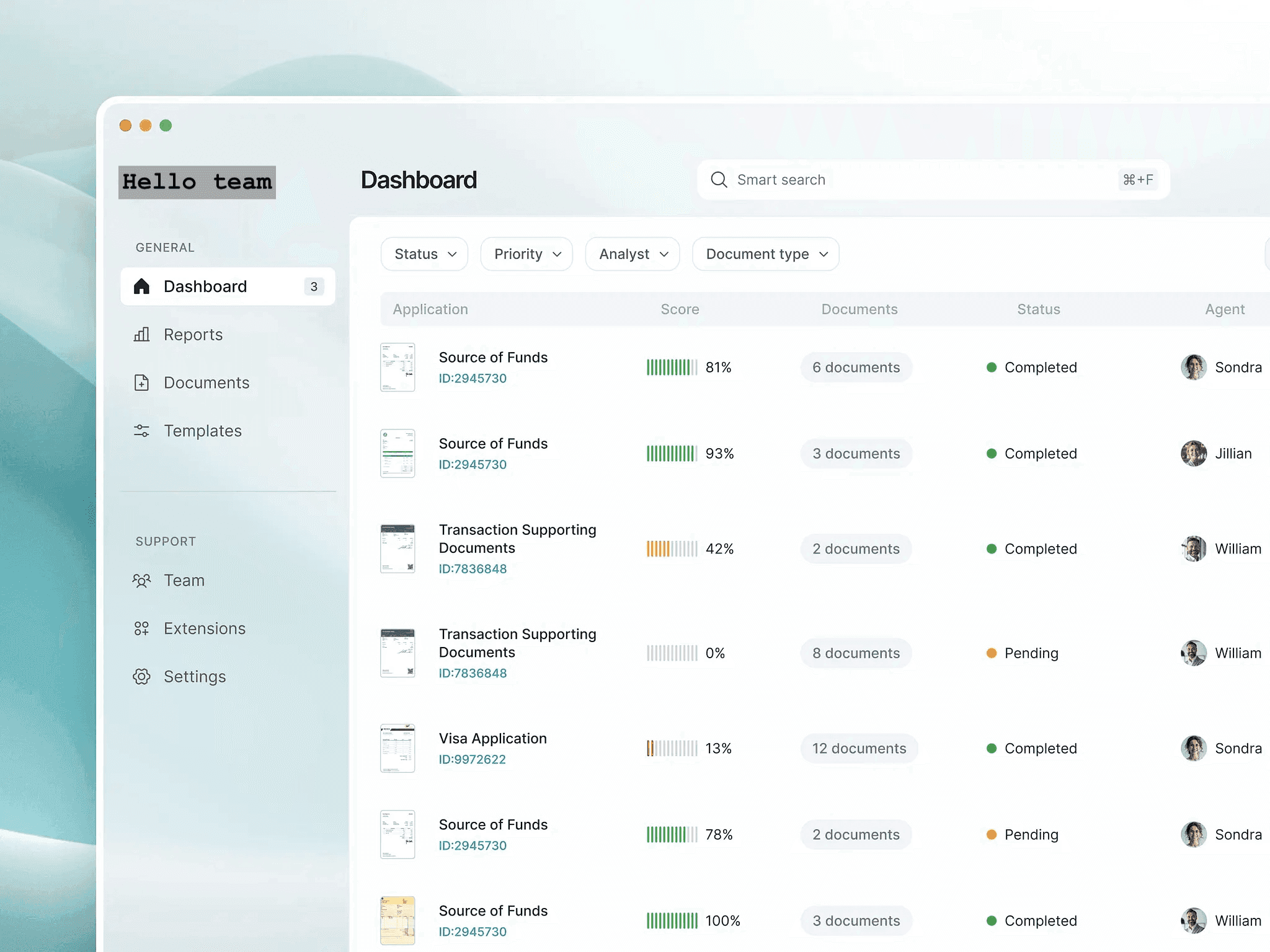

Orchestrated agents for multi-step workflows: when a task requires search, lookups or external APIs, use orchestration layers (e.g., LangChain, Orq.ai) that manage calls, caching, and retries so the LLM is not the only control plane.
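
The core of what orchestration layers add can be sketched in plain Python. This is not the LangChain or Orq.ai API; it is a hypothetical wrapper, assuming a `call` function that sends a prompt and raises a hypothetical `TransientError` on timeouts or rate limits. Caching is keyed on the exact prompt string, which only makes sense for repeatable requests.

```python
import time
from typing import Callable, Dict


class TransientError(Exception):
    """Hypothetical error type for retryable failures such as timeouts or rate limits."""


def with_cache_and_retries(
    call: Callable[[str], str],
    max_attempts: int = 3,
    base_delay: float = 0.5,
) -> Callable[[str], str]:
    """Wrap a model or API call: identical prompts hit a cache, transient failures are retried."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    cache: Dict[str, str] = {}

    def wrapped(prompt: str) -> str:
        if prompt in cache:
            return cache[prompt]
        for attempt in range(1, max_attempts + 1):
            try:
                result = call(prompt)
            except TransientError:
                if attempt == max_attempts:
                    raise  # give up and let the caller's fallback path handle it
                time.sleep(base_delay * 2 ** (attempt - 1))  # exponential backoff
            else:
                cache[prompt] = result
                return result
        raise RuntimeError("unreachable")  # the loop always returns or raises

    return wrapped


# Usage with a fake model that times out once, then succeeds.
calls = {"count": 0}


def flaky_model(prompt: str) -> str:
    calls["count"] += 1
    if calls["count"] == 1:
        raise TransientError("simulated timeout")
    return f"answer to: {prompt}"


ask = with_cache_and_retries(flaky_model, base_delay=0.0)
print(ask("status of order 42"))  # first attempt fails, the retry succeeds
print(ask("status of order 42"))  # served from the cache; flaky_model is not called again
```

Keeping retries and caching outside the model call is what lets the orchestration layer, not the LLM, decide when to try again and when to fail over.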

LLMs behind a guarded interface for high-risk decisions: always present model outputs as suggestions, with confidence signals and version information, rather than as final decisions. This hybrid approach reduces risk while preserving speed. Practitioners commonly group these practices under the labels LLM orchestration or LLMOps.
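
One way to encode this third pattern, as a sketch: every output carries a confidence score plus the model and prompt versions that produced it, and nothing is applied without a reviewer. The thresholds, field names, and the assumption that confidence comes from a calibrated scorer are all hypothetical; how to compute confidence signals is left open here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Suggestion:
    text: str
    confidence: float    # 0.0 to 1.0; assumed to come from a calibrated scorer
    model_version: str   # which model produced the suggestion
    prompt_version: str  # which prompt template produced it


def presentation_mode(s: Suggestion, high: float = 0.9, low: float = 0.6) -> str:
    """Decide how a suggestion is shown; a human still approves every high-risk decision."""
    if not 0.0 <= s.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if s.confidence >= high:
        return "prefill"  # pre-filled for the reviewer to approve or edit
    if s.confidence >= low:
        return "flagged"  # shown with an explicit low-confidence warning
    return "manual"       # not shown; the case goes to normal manual handling


def audit_entry(s: Suggestion, reviewer_decision: str) -> dict:
    """Log record tying the final human decision to the exact model and prompt versions."""
    return {
        "model_version": s.model_version,
        "prompt_version": s.prompt_version,
        "confidence": s.confidence,
        "suggested": s.text,
        "decided": reviewer_decision,
        "accepted": reviewer_decision == s.text,
    }
```

Logging the versions alongside the reviewer's decision is what makes later comparisons between prompt or model versions possible.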

Human-in-the-loop: where to keep the human and where to automate

Retain humans where judgment, trust, or legal responsibility matter: final contract review, customer escalations, and investment decisions. Automate low-risk, high-volume work like first-draft copy, data extraction, or routine responses. Use shadow testing or "assist mode" in early rollouts to compare human and model outputs before you let the model influence outcomes directly.
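
Shadow testing needs little more than logging both decisions side by side. A minimal sketch, assuming each case records what the human did and what the model would have done (the decision labels are hypothetical): the agreement rate shows how close the model is, and the most common disagreement pairs show where it is not.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class ShadowRecord:
    item_id: str
    human_decision: str  # what was actually done; this is what the customer sees
    model_decision: str  # logged only; never acted on while in shadow mode


def shadow_report(
    records: Sequence[ShadowRecord],
) -> Tuple[float, List[Tuple[Tuple[str, str], int]]]:
    """Return the human/model agreement rate and the most common disagreements."""
    if not records:
        raise ValueError("no shadow records to compare")
    agree = sum(r.human_decision == r.model_decision for r in records)
    disagreements = Counter(
        (r.human_decision, r.model_decision)
        for r in records
        if r.human_decision != r.model_decision
    )
    return agree / len(records), disagreements.most_common()


records = [
    ShadowRecord("t1", "refund", "refund"),
    ShadowRecord("t2", "escalate", "refund"),
    ShadowRecord("t3", "reply", "reply"),
    ShadowRecord("t4", "escalate", "refund"),
]
rate, top = shadow_report(records)
print(f"agreement {rate:.0%}; most common disagreement: {top[0]}")
```

In this made-up sample the model suggests a refund where humans escalated, the kind of pattern that argues for keeping escalations with a human.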

Data and context: the unglamorous secret to reliability

The most reliable LLM systems combine model capability with good context: document embeddings, up-to-date knowledge indexes, and controlled context windows. Invest in content engineering: canonicalise formats, keep reference data in queryable form, and ground answers in retrieved reference content (cached where it is stable) to reduce hallucinations. Teams that treat context engineering as an operational discipline tend to outperform those that only tweak prompts.
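
A minimal retrieval sketch, under loud assumptions: `vectorise` is a toy bag-of-words stand-in for a real embedding model, `DOCS` is hypothetical reference content, and the in-memory `INDEX` stands in for a proper knowledge index. It shows the shape of the approach: precompute document vectors, retrieve the closest passages, cache repeated lookups, and instruct the model to answer only from what was retrieved.

```python
import math
import re
from collections import Counter
from functools import lru_cache
from typing import Tuple

# Hypothetical reference content, canonicalised into short plain-text passages.
DOCS = (
    "Refunds are issued within 14 days of a returned item being received.",
    "Enterprise plans include single sign-on and an uptime SLA.",
    "Passwords can be reset from the account settings page.",
)


def vectorise(text: str) -> Counter:
    """Toy stand-in for an embedding model: bag-of-words term counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Built once at ingestion time; rebuild it (and clear the cache) when DOCS change.
INDEX = tuple((doc, vectorise(doc)) for doc in DOCS)


@lru_cache(maxsize=1024)
def retrieve(query: str, k: int = 2) -> Tuple[str, ...]:
    """Top-k passages with any overlap; cached so repeated queries skip the scoring step."""
    q = vectorise(query)
    scored = sorted(((cosine(q, vec), doc) for doc, vec in INDEX), reverse=True)
    return tuple(doc for score, doc in scored[:k] if score > 0)


def grounded_prompt(question: str) -> str:
    passages = retrieve(question)
    context = "\n".join(f"- {p}" for p in passages) or "- (no matching reference content)"
    return (
        "Answer using only the reference content below. "
        "If it does not contain the answer, say so.\n\n"
        f"Reference content:\n{context}\n\nQuestion: {question}"
    )


print(grounded_prompt("How do I reset my password?"))
```

Because `functools.lru_cache` never expires entries on its own, keeping the index up to date means calling `retrieve.cache_clear()` whenever the reference content changes.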

Monitoring, cost control, and drift detection

Measure throughput, latency, error rate, and downstream impact. Track token usage and cache repeated requests to control costs. Set up drift detection: if outputs degrade or user corrections spike, flag the model for retraining or the prompt for revision. Practical LLMOps practices include automated tests, canary releases, and rollback paths.
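
Two of these checks are simple enough to sketch, assuming you log token counts per call and whether a user corrected each output. Prices are parameters rather than real vendor figures, and the baseline, window, and tolerance values are hypothetical. The drift rule (alert when the rolling correction rate exceeds a multiple of the pilot baseline) is one simple choice among many.

```python
from collections import deque


def estimate_cost(input_tokens: int, output_tokens: int,
                  price_in_per_1k: float, price_out_per_1k: float) -> float:
    """Cost of one call given per-1,000-token prices (check your vendor's actual pricing)."""
    return input_tokens / 1000 * price_in_per_1k + output_tokens / 1000 * price_out_per_1k


class CorrectionRateMonitor:
    """Flags drift when the share of outputs users correct rises well above a baseline."""

    def __init__(self, baseline_rate: float, window: int = 200,
                 tolerance: float = 2.0, min_samples: int = 50) -> None:
        self.baseline_rate = baseline_rate  # correction rate measured during the pilot
        self.tolerance = tolerance          # alert when current rate exceeds baseline x tolerance
        self.min_samples = min_samples      # avoid alerting on a handful of events
        self.recent = deque(maxlen=window)  # rolling window of recent outcomes

    def record(self, corrected: bool) -> None:
        self.recent.append(corrected)

    @property
    def current_rate(self) -> float:
        return sum(self.recent) / len(self.recent) if self.recent else 0.0

    def drifted(self) -> bool:
        if len(self.recent) < self.min_samples:
            return False
        return self.current_rate > self.baseline_rate * self.tolerance


monitor = CorrectionRateMonitor(baseline_rate=0.05, window=100, min_samples=20)
for corrected in [False] * 40 + [True] * 10:
    monitor.record(corrected)
print(f"correction rate {monitor.current_rate:.0%}, drift: {monitor.drifted()}")
```

Here 10 corrections in 50 outputs (20%) exceed twice the 5% baseline, so the monitor flags drift; in practice that flag would trigger the prompt revision or retraining review described above.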

Tooling and LLM choices: pick fit, not hype

Choose tools that fit your team: managed APIs for speed, self-hosted models for data control, and orchestration frameworks for complex flows. Prioritise vendors with clear SLAs, data handling policies, and exportable logs. Consider vendor lock-in and how easy it is to switch a model or orchestration layer later.
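
Keeping a later switch cheap is mostly a matter of isolating vendor code behind one small interface. A minimal sketch using `typing.Protocol`; the `TextModel` interface, the stub providers, and the registry are hypothetical, and real adapters would wrap whichever SDK or self-hosted endpoint you choose.

```python
from typing import Callable, Dict, Protocol


class TextModel(Protocol):
    """The only surface application code is allowed to depend on."""

    name: str

    def complete(self, prompt: str) -> str: ...


class UppercaseStub:
    """Hypothetical stand-in; a real adapter would wrap a vendor SDK or a self-hosted endpoint."""

    name = "uppercase-stub-v1"

    def complete(self, prompt: str) -> str:
        return prompt.upper()


class ReverseStub:
    """Second hypothetical provider, showing that a swap needs no application changes."""

    name = "reverse-stub-v1"

    def complete(self, prompt: str) -> str:
        return prompt[::-1]


PROVIDERS: Dict[str, Callable[[], TextModel]] = {
    "upper": UppercaseStub,
    "reverse": ReverseStub,
}


def get_model(provider: str) -> TextModel:
    """Select a provider from configuration, failing loudly on unknown names."""
    try:
        return PROVIDERS[provider]()
    except KeyError:
        raise ValueError(f"unknown provider {provider!r}; known: {sorted(PROVIDERS)}") from None


def summarise(text: str, model: TextModel) -> str:
    # Application code sees only TextModel, so changing vendors is a config change.
    return model.complete(f"Summarise: {text}")


print(summarise("meeting notes", get_model("upper")))
```

The trade-off is that a lowest-common-denominator interface hides vendor-specific features; add those deliberately, behind the same adapter, rather than letting SDK calls spread through the codebase.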

A 90-day starting plan you can use tomorrow

Week 1–2: define the task, gather representative inputs, and pick a success metric.

Week 3–6: build a narrow prototype using prompt templates and retrieval; run shadow tests.

Week 7–10: introduce human-in-the-loop review with monitoring, and measure impact against your metric.

Week 11–12: decide to scale, pause, or iterate; if scaling, add orchestration, caching, and deployment controls. This staged approach controls risk while delivering value quickly.

© Parioni group 2026.

UEN: 202436585E